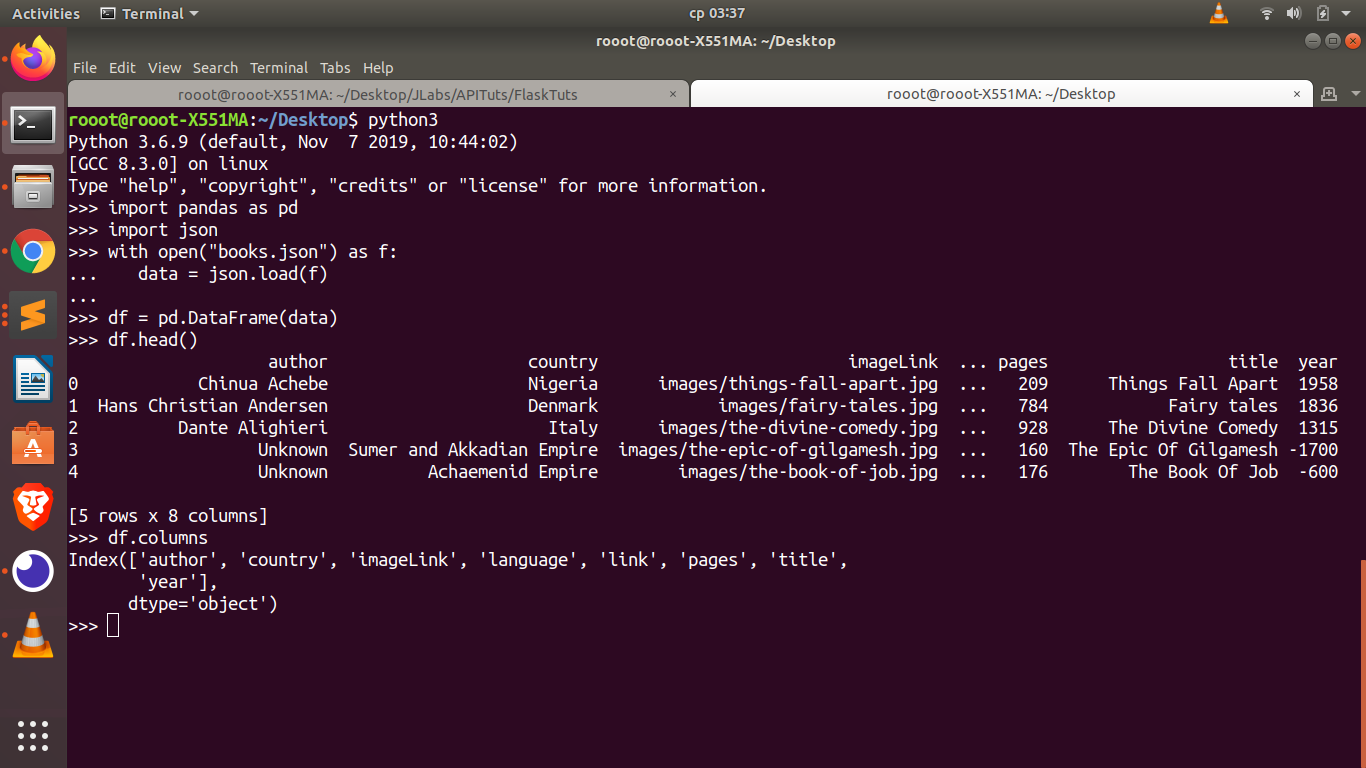

The CDC JSON data isn’t quite in the right shape for our purposes:
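sqlite-utils imports a JSON array of objects as rows, so a response that wraps its rows inside an outer object has to be unwrapped first. Below is a minimal Go sketch of that reshaping step. It assumes the rows sit in an array under a single top-level key. That is an assumption, not something shown above. The `vaccination_data` key name and the sample values are placeholders for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// unwrapArray decodes a JSON object, pulls out the array stored under key,
// checks that it is an array of objects, and re-encodes it on its own.
func unwrapArray(data []byte, key string) ([]byte, error) {
	var wrapper map[string]json.RawMessage
	if err := json.Unmarshal(data, &wrapper); err != nil {
		return nil, err
	}
	raw, ok := wrapper[key]
	if !ok {
		return nil, fmt.Errorf("key %q not found", key)
	}
	// Keeping each field as json.RawMessage preserves numbers exactly
	// instead of round-tripping them through float64.
	var rows []map[string]json.RawMessage
	if err := json.Unmarshal(raw, &rows); err != nil {
		return nil, fmt.Errorf("value under %q is not an array of objects: %w", key, err)
	}
	// Re-encoding sorts object keys and drops insignificant whitespace.
	return json.Marshal(rows)
}

func main() {
	// Hypothetical wrapper shape; "vaccination_data" is an assumed key name.
	input := []byte(`{"runid": 1, "vaccination_data": [{"Location": "CA", "Doses": 10}]}`)
	flat, err := unwrapArray(input, "vaccination_data")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(flat))
}
```

The flat array it prints is the shape a JSON import step can turn directly into one row per object.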

#Sqlite json download

We’re going to run a join from that CSV data to that JSON data, and output a table of results. First, we need to download the files.
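Once both files are on disk, the join pairs each CSV row with the JSON object that shares its key. The sketch below does this in plain Go rather than SQLite, as a hash join: it indexes the JSON side by key, then walks the CSV rows. Several details are assumptions for illustration, not the real files' contents: the CSV column layout (date,state,fips,cases,deaths), the JSON field names `name` and `doses`, and the toy values.

```go
package main

import (
	"encoding/csv"
	"encoding/json"
	"fmt"
	"strconv"
	"strings"
)

// stateDoses is a hypothetical shape for the JSON side of the join.
type stateDoses struct {
	Name  string `json:"name"`
	Doses int    `json:"doses"`
}

// joinByState inner-joins CSV rows to JSON objects where the CSV "state"
// column equals the JSON "name" field. It returns [state, cases, doses] rows.
func joinByState(csvData, jsonData string) ([][]string, error) {
	var docs []stateDoses
	if err := json.Unmarshal([]byte(jsonData), &docs); err != nil {
		return nil, err
	}
	// Index the JSON side by its join key.
	doses := make(map[string]int, len(docs))
	for _, d := range docs {
		doses[d.Name] = d.Doses
	}

	records, err := csv.NewReader(strings.NewReader(csvData)).ReadAll()
	if err != nil {
		return nil, err
	}
	if len(records) == 0 {
		return nil, fmt.Errorf("CSV has no header row")
	}
	// Locate the columns we need by header name.
	col := make(map[string]int)
	for i, name := range records[0] {
		col[name] = i
	}
	si, okState := col["state"]
	ci, okCases := col["cases"]
	if !okState || !okCases {
		return nil, fmt.Errorf("CSV needs state and cases columns")
	}

	var out [][]string
	for _, rec := range records[1:] {
		d, ok := doses[rec[si]]
		if !ok {
			continue // inner join: skip CSV rows with no matching JSON object
		}
		out = append(out, []string{rec[si], rec[ci], strconv.Itoa(d)})
	}
	return out, nil
}

func main() {
	// Toy values, not real figures.
	csvData := "date,state,fips,cases,deaths\n2021-01-01,California,06,100,1\n2021-01-01,Texas,48,200,2\n"
	jsonData := `[{"name": "California", "doses": 5}, {"name": "Oregon", "doses": 7}]`
	rows, err := joinByState(csvData, jsonData)
	if err != nil {
		panic(err)
	}
	for _, r := range rows {
		fmt.Println(strings.Join(r, "\t"))
	}
}
```

The CSV is a time series, so a real file would yield one joined row per state per date. In SQL you would normally add a filter on the date column to keep a single day.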

The CDC have an undocumented JSON endpoint (which I’ve been archiving here) tracking the progress of vaccination across different states. The New York Times publish a us-states.csv file with Covid cases and deaths by state over time.

It can import data, execute SQL and output the result in a one-liner, without needing any temporary database files along the way.

My Dogsheep GitHub organization has a number of repositories. GitHub make those available via an authentication-optional API endpoint, which returns JSON that looks like this (simplified):

select full_name, forks_count, stargazers_count as stars

The stdin table is the temporary table created for the data piped in to the tool. The query selects three of the JSON properties, renames stargazers_count to stars, sorts by stars and returns the first three.

The -t option here means “output as a formatted table”; without that option we get JSON:

$ curl -s '' \

Or we can use --csv to get back CSV:

$ curl -s '' \

The -t option supports a number of different formats, specified using --fmt. If I wanted to generate a LaTeX table of the top repos by stars I could do this:

$ curl -s '' \

We can run aggregate queries too. Let’s add up the total size and total number of stars across all of those repositories:

$ curl -s '' \

select sum(size), sum(stargazers_count) from stdin

(I believe size here is measured in kilobytes: the GitHub API documentation isn’t clear on this point.)

#Joining across different files

All of these examples have worked with JSON data piped into the tool, but you can also pass one or more files, of different formats, in a way that lets you execute joins against them.

As an example, let’s combine two sources of data.
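The two GitHub queries in this section can also be mimicked without a database: the top three repositories by stars, and the size and star totals. This Go sketch decodes the JSON array into structs and reproduces the select, rename, sort, limit and sum steps by hand. It assumes a descending sort, as "top repos by stars" implies. The repository names and numbers are made up.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

// repo holds the properties the two queries read from each JSON object.
type repo struct {
	FullName        string `json:"full_name"`
	ForksCount      int    `json:"forks_count"`
	StargazersCount int    `json:"stargazers_count"`
	Size            int    `json:"size"`
}

// topByStars mirrors: select full_name, forks_count, stargazers_count as stars
// from stdin order by stars desc limit n
func topByStars(repos []repo, n int) []repo {
	sorted := append([]repo(nil), repos...) // copy so the input is not reordered
	sort.SliceStable(sorted, func(i, j int) bool {
		return sorted[i].StargazersCount > sorted[j].StargazersCount
	})
	if len(sorted) > n {
		sorted = sorted[:n]
	}
	return sorted
}

// totals mirrors: select sum(size), sum(stargazers_count) from stdin
func totals(repos []repo) (size, stars int) {
	for _, r := range repos {
		size += r.Size
		stars += r.StargazersCount
	}
	return size, stars
}

func main() {
	// Toy input in the shape of the (simplified) GitHub API response.
	data := []byte(`[
		{"full_name": "example/a", "forks_count": 1, "stargazers_count": 5, "size": 10},
		{"full_name": "example/b", "forks_count": 2, "stargazers_count": 9, "size": 20},
		{"full_name": "example/c", "forks_count": 0, "stargazers_count": 1, "size": 30},
		{"full_name": "example/d", "forks_count": 4, "stargazers_count": 7, "size": 40}
	]`)
	var repos []repo
	if err := json.Unmarshal(data, &repos); err != nil {
		panic(err)
	}
	for _, r := range topByStars(repos, 3) {
		fmt.Println(r.FullName, r.ForksCount, r.StargazersCount)
	}
	size, stars := totals(repos)
	fmt.Println(size, stars)
}
```

The stable sort keeps ties in input order. SQL makes no such promise without an extra ordering column.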

#Sqlite json full

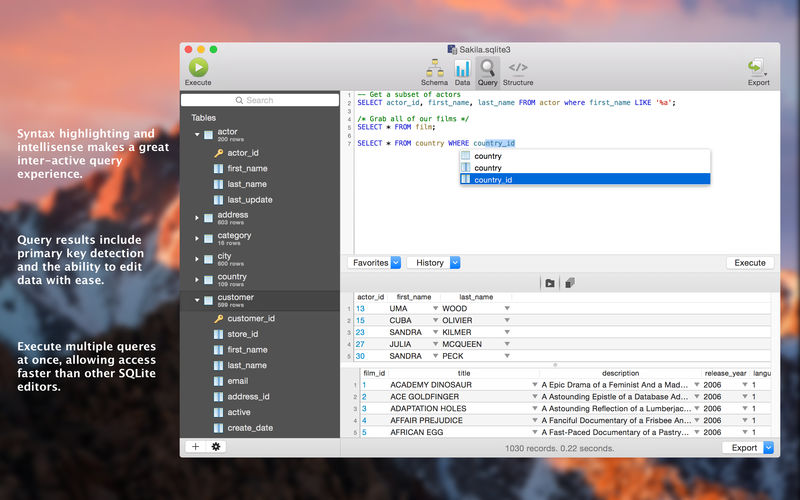

I’ve recorded this video demonstrating the new feature, with full accompanying notes below.

sqlite-utils already offers a mechanism for importing CSV and JSON data into a SQLite database file, in the form of the sqlite-utils insert command. Processing data with this involves two steps: first import it into a temp.db file, then use sqlite-utils query to run queries and output the results.

Using SQL to re-shape data is really useful: since sqlite-utils can output in multiple different formats, I frequently find myself loading in a CSV file and exporting it back out as JSON, or vice-versa.

This week I realized that I had most of the pieces in place to reduce this to a single step. The new sqlite-utils memory command (full documentation here) operates against a temporary, in-memory SQLite database.

#Sqlite json install

The new feature is part of sqlite-utils 3.10, which I released this morning. You can install it using brew install sqlite-utils or pip install sqlite-utils.

The new sqlite-utils memory command can import CSV and JSON data directly into an in-memory SQLite database, combine and query it using SQL and output the results as CSV, JSON or various other formats of plain text tables.

If you want to build some query helpers, you can make a struct that implements the clause.

Joining CSV and JSON data with an in-memory SQLite database

Scan scans a value into Jsonb and implements the sql.Scanner interface:

func (j *JSON) Scan(value interface

db.AddError(errors.New("invalid encryption key"))
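The Scan fragment above is cut off mid-signature. Here is a minimal sketch of a complete version that uses only the standard library, with no GORM import. Two behaviours are assumptions about what a driver may hand back: accepting string as well as []byte, and copying the bytes. The copy follows the database/sql rule that a Scanner must not retain driver-owned []byte buffers.

```go
package main

import (
	"database/sql"
	"encoding/json"
	"fmt"
)

// JSON holds raw JSON text read from a database column.
type JSON json.RawMessage

// Compile-time check that *JSON satisfies sql.Scanner.
var _ sql.Scanner = (*JSON)(nil)

// Scan scans a database value into j, implementing sql.Scanner.
// Drivers may return []byte or string for text columns, and nil for NULL.
func (j *JSON) Scan(value any) error {
	var b []byte
	switch v := value.(type) {
	case nil:
		*j = nil
		return nil
	case []byte:
		b = v
	case string:
		b = []byte(v)
	default:
		return fmt.Errorf("failed to scan JSON value of type %T", value)
	}
	if !json.Valid(b) {
		return fmt.Errorf("invalid JSON: %q", b)
	}
	// Copy: the driver may reuse the []byte buffer after Scan returns.
	*j = append(JSON(nil), b...)
	return nil
}

func main() {
	var j JSON
	if err := j.Scan([]byte(`{"a": 1}`)); err != nil {
		panic(err)
	}
	fmt.Println(string(j))
	fmt.Println(j.Scan(42)) // an unsupported type is reported as an error
}
```

The compile-time assertion fails the build if the method signature drifts from sql.Scanner, which is cheaper than discovering the mismatch at query time.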

#Sqlite json how to

#Implementing customized data types

GORM provides a few interfaces that allow users to define well-supported customized data types for GORM, taking JSON as an example.

#Scanner / Valuer

The customized data type has to implement the Scanner and Valuer interfaces, so GORM knows how to receive/save it into the database.
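Here is a sketch that shows both halves of the Scanner / Valuer pair together, using a hypothetical Attributes struct stored in one JSON text column. Value encodes the struct when GORM (or plain database/sql) writes the field, and Scan decodes it when reading back. Only standard library packages are used, and the type and field names are placeholders.

```go
package main

import (
	"database/sql"
	"database/sql/driver"
	"encoding/json"
	"fmt"
)

// Attributes is a hypothetical struct stored in a single JSON text column.
type Attributes struct {
	Color string   `json:"color"`
	Tags  []string `json:"tags"`
}

// Compile-time checks for the two interfaces.
var (
	_ sql.Scanner   = (*Attributes)(nil)
	_ driver.Valuer = Attributes{}
)

// Value encodes a as JSON text for storage, implementing driver.Valuer.
func (a Attributes) Value() (driver.Value, error) {
	b, err := json.Marshal(a)
	if err != nil {
		return nil, err
	}
	return string(b), nil
}

// Scan decodes a stored JSON value into a, implementing sql.Scanner.
func (a *Attributes) Scan(value any) error {
	var b []byte
	switch v := value.(type) {
	case nil:
		*a = Attributes{} // treat SQL NULL as the zero value
		return nil
	case []byte:
		b = v
	case string:
		b = []byte(v)
	default:
		return fmt.Errorf("cannot scan %T into Attributes", value)
	}
	var decoded Attributes
	if err := json.Unmarshal(b, &decoded); err != nil {
		return err
	}
	*a = decoded
	return nil
}

func main() {
	in := Attributes{Color: "red", Tags: []string{"a", "b"}}
	stored, err := in.Value() // what would be written to the column
	if err != nil {
		panic(err)
	}
	fmt.Println(stored)

	var out Attributes
	if err := out.Scan(stored); err != nil { // what reading it back does
		panic(err)
	}
	fmt.Println(out.Color, out.Tags)
}
```

Scan decodes into a fresh value before assigning, so a failed Scan leaves the existing struct untouched.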
